An interactive experience that shows young people the bias and prejudice within AI companions

Client

SparkOH!, Belgium

Timeframe

May 2025 - August 2025

Role

Designer, Lead Researcher

Engagement

5,000+ school-aged children and adolescents

Objective

To make AI ethics tangible by letting young people experience algorithmic bias firsthand through manipulated facial analysis.

“The AI therapist told me I'd struggle with relationships based on my 'defensive jawline.' It felt so real and clinical that I actually started believing it for a moment.”

— Participant

Scope

I was invited by SparkOH!, a science and technology museum in Frameries, Belgium, to create an interactive experience that reveals how bias and prejudice work in today’s AI systems. These problems are often invisible or misunderstood, especially by young people. The exhibit, How They See Us (French title: L’IA Mentaliste!?), is aimed at visitors aged 6 to 18.

The experience centres on a deliberately flawed Large Language Model (LLM) and an AI that claims to offer psychological assessments, much like the AI companions and virtual therapists that are becoming popular today. The problem? These systems were never trained or qualified to do this kind of work.

What happens next is both playful and unsettling. In a private kiosk, visitors receive confident, clinical-sounding assessments of their personality, mental health, and future potential. These judgments are based solely on their appearance or basic information. Here, visitors are empowered to have a metaphorical conversation with their future self.

The experience encourages visitors to question whether we should trust AI, especially when it presents itself as neutral, scientific, or therapeutic. While the surface feels light-hearted, the message is serious: AI companions can reinforce harmful stereotypes by associating characteristics such as facial expressions, clothing, or race with personal traits. As young people increasingly turn to chatbots and AI for support, How They See Us aims to build critical thinking skills and help them navigate a future where AI judgments are becoming more common and more consequential.

Components

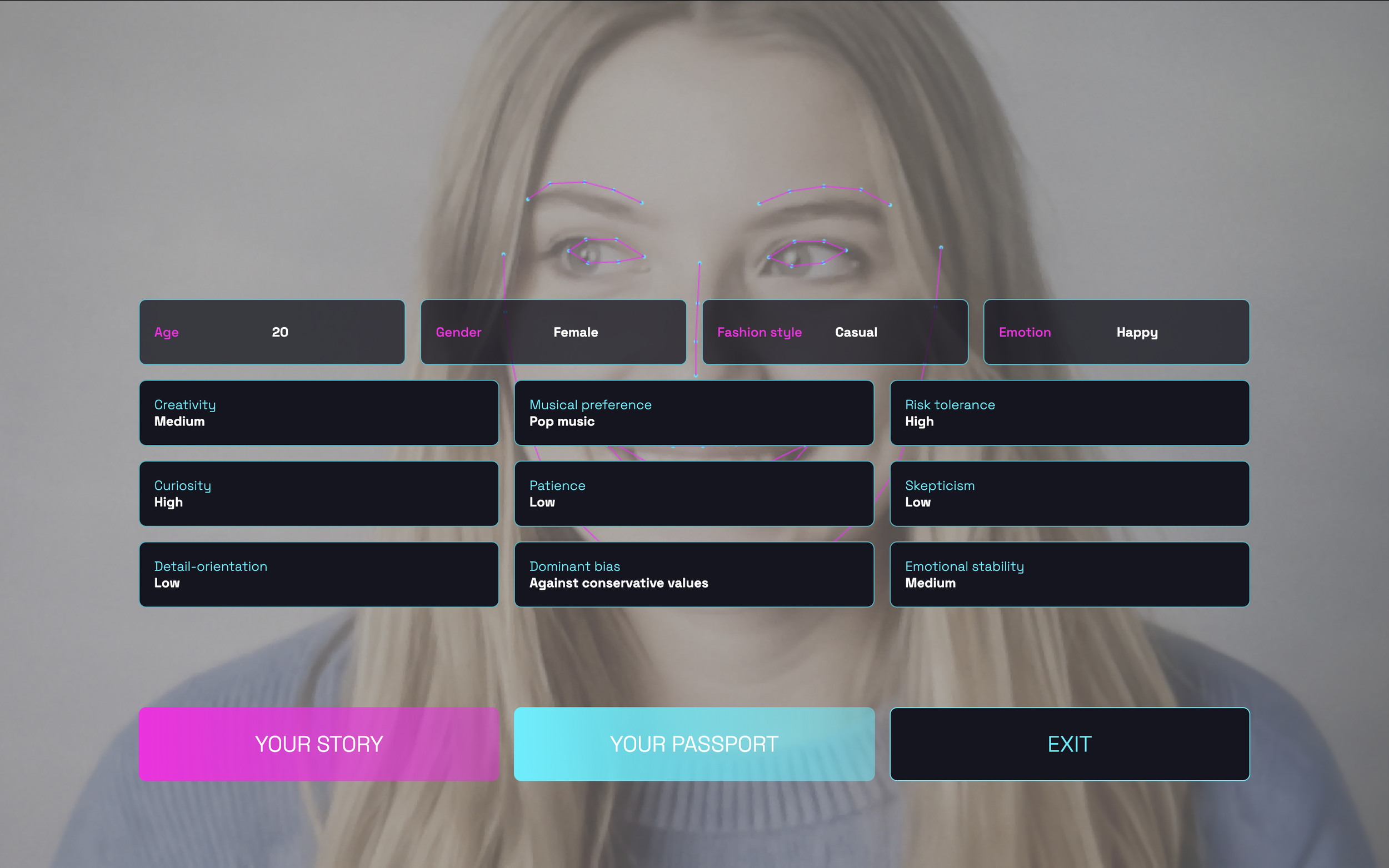

How They See Us is a multilingual, GDPR-compliant interactive experience designed to be a safe learning tool for young people. The system creates a profile covering about 20 different traits, including age, fashion sense, creativity, and even supposed biases. Each assessment contains an explanation that mimics how an AI might (incorrectly) justify its conclusions.

These assessments then feed into a personalised story that connects the visitor’s profile to real-world news themes, blurring the line between realistic AI and dystopian futures. Sometimes the AI is brutally honest, but more often it adopts a flattering, people-pleasing, sycophantic tone when imagining visitors’ futures.

At the end, each visitor gets a digital “passport” summarising their profile. It’s a fun souvenir, but also designed to prompt people to think and talk about how AI interprets and misrepresents who we truly are.
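For readers curious how such a profile might be represented, here is a minimal illustrative sketch in Python. It is not the exhibit's actual implementation: the class names, fields, and passport layout are all hypothetical, and the sample traits are invented for illustration (the "defensive jawline" wording is borrowed from a participant quote). It simply shows one way a set of trait assessments, each paired with its (unfounded) justification, could be collected and rendered as a passport-style summary.

```python
from dataclasses import dataclass, field


@dataclass
class TraitAssessment:
    """One trait in a visitor profile (hypothetical structure)."""
    trait: str          # e.g. "creativity"
    verdict: str        # the AI's confident-sounding claim
    justification: str  # the deliberately unfounded explanation


@dataclass
class VisitorProfile:
    """A visitor's profile; the real system covers about 20 traits."""
    language: str
    assessments: list[TraitAssessment] = field(default_factory=list)

    def passport(self) -> str:
        """Render a plain-text 'passport' summarising the profile."""
        lines = [f"AI PASSPORT ({self.language})"]
        for a in self.assessments:
            lines.append(f"- {a.trait}: {a.verdict} (because: {a.justification})")
        return "\n".join(lines)


# Invented sample data, for illustration only.
profile = VisitorProfile(
    language="fr",
    assessments=[
        TraitAssessment("creativity", "high", "colourful clothing"),
        TraitAssessment("relationships", "will struggle", "'defensive jawline'"),
    ],
)
print(profile.passport())
```

Keeping the justification next to each verdict mirrors the point of the exhibit: the reasoning is visible, so visitors can see how thin it is.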

“My friends and I compared our AI passports, and it was chilling. We're using them in our sociology class about algorithmic bias because nothing explains it better than seeing it happen to you.”

— Participant

Outcome

How They See Us takes the form of a slick therapy kiosk, inviting visitors to see how an AI interprets them. It discusses algorithmic bias and lets people experience it firsthand. Visitors are personally confronted with the unsettling reality of how AI can misrepresent and reduce human identity.

This is particularly relevant in light of the growing popularity of AI-driven virtual therapists among young people. The work also sheds light on how these black-box, profit-oriented systems can be manipulated. In doing so, it turns each visitor into both a subject of bias and a witness to the need for stronger public oversight of AI and LLM applications.